YouGov experiments highlight the importance of question framing in properly measuring public opinion

Questionnaire design and survey methodology have been something of a hot topic lately among public opinion experts, observers, and commentators on social media.

Specifically, some recently published results from other polling firms have prompted discussion of the importance of using balanced, neutral wording in question framing and introductions, as well as of the appropriateness of agree/disagree scales for measuring public support for, or opposition to, specific policies and proposals.

Now, new YouGov analysis of survey experiments conducted in recent weeks highlights two of the main concerns in contemporary survey design and how they can affect our measurements of public opinion:

- Leading respondents towards a particular answer by offering, in the question wording itself, a reason to support one side of the argument but not the other.

- The introduction of acquiescence bias into results by using an agree/disagree answer scale to measure support or opposition to a given position or policy.

A crude example of a leading question might be “To what extent do you believe that this current corrupt Conservative government is doing a bad job?”, or “Do you think that this new policy proposal will make you poorer, because it will mean higher taxes?”.

Neither of these would be considered an acceptable measurement of public opinion. Because they do not present the options (or the debate) fairly, they would greatly overstate the extent to which the public actually holds those positions.

Meanwhile, acquiescence bias comes into play whenever survey respondents are asked questions that use an ‘agree-disagree’ framing to measure their response. Examples would include questions along the lines of “To what extent do you agree or disagree with the government’s decision to introduce a new fast track system for refugee applications?”, or “Do you agree or disagree that the current voting system is unfair?”.

The problem with questions like these, it is argued, is that agree/disagree scales artificially inflate support for a given position or proposal, because people are more inclined to agree with a suggestion than to disagree with it, particularly if they have little prior information or no strongly held opinion on the topic at hand.

But to what extent do these biases really affect polling results? Can we put a number on how much acquiescence bias and leading bias might each affect findings in contemporary survey research? And how should we treat published results that might be found wanting on either (or both) of these counts? Analysis of two new survey experiments run by YouGov shows just how serious the problem can be, and highlights the need for constant rigour and vigilance in upholding standards of questionnaire design.

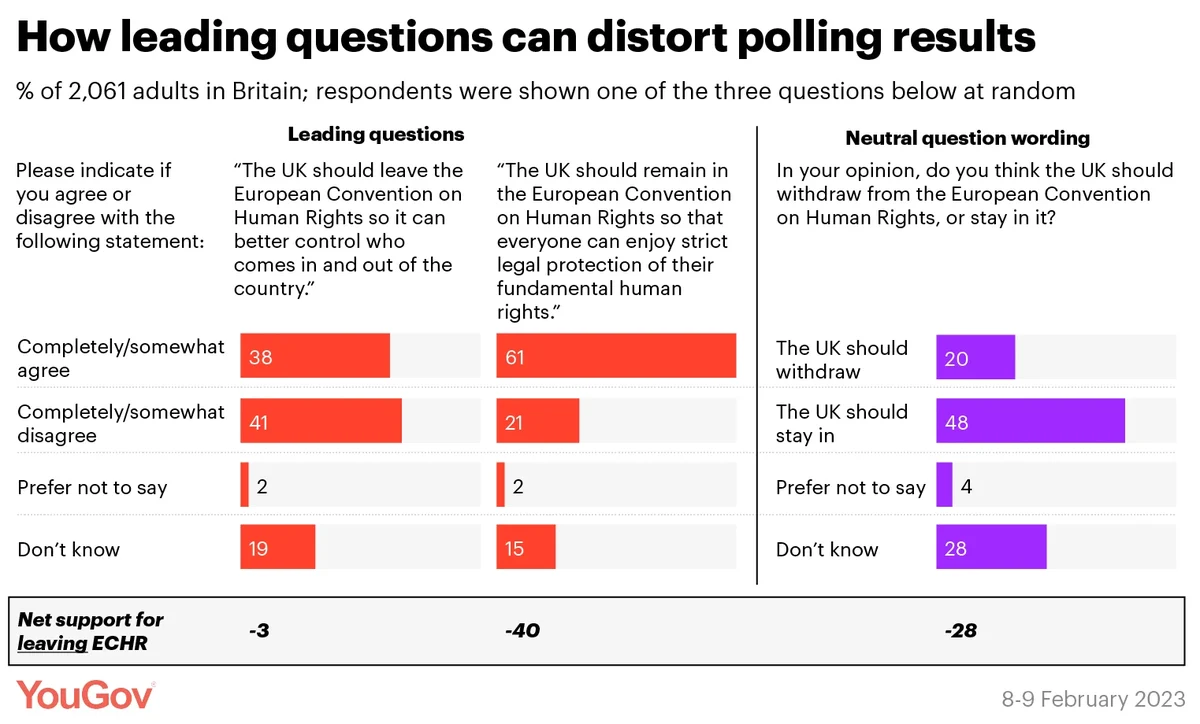

Experiment 1 – Leading respondents in the context of the European Convention on Human Rights

Our first split experiment takes the case of the European Convention on Human Rights (hereafter, ECHR), replicating a problematic question design we recently saw circulating on social media and comparing its outcome with alternative measurements. In the experiment, we sent respondents from the same survey down one of three random routes, each containing a single question on the topic, so that each respondent saw only one of the three possible questions.

This approach, sometimes called “A/B testing” or a “randomised controlled trial”, is a powerful tool for testing how different respondents from the same survey react to different framing, arguments, pre-question materials and, of course, questionnaire design. Running the experiment in this way, rather than running separate surveys at different times, minimises the risk of external factors (e.g. events in the news cycle) interfering with the results, giving greater confidence that the factors we are testing are solely responsible for any differences we see.
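To make the random-routing design concrete, here is a minimal Python sketch of assigning each respondent in one survey to one of three question versions. The function name `assign_routes` and the route labels are hypothetical, and the sketch assumes simple independent randomisation; it is not a description of how YouGov's survey platform actually performs the split.

```python
import random
from collections import Counter

# Hypothetical labels for the three question versions in one experiment.
ROUTES = ("route_a", "route_b", "route_c")


def assign_routes(respondent_ids, routes=ROUTES, seed=None):
    """Assign each respondent to exactly one route, chosen uniformly at random.

    Because every respondent has the same chance of each route, the groups
    differ (on average) only in the question they see, not in who they are
    or when they answered.
    """
    rng = random.Random(seed)  # seeded generator so the split is reproducible
    return {rid: rng.choice(routes) for rid in respondent_ids}


if __name__ == "__main__":
    assignment = assign_routes(range(3000), seed=1)
    # Each route should receive roughly, not exactly, a third of the sample.
    print(Counter(assignment.values()))
```

Simple randomisation like this gives approximately equal thirds; if exactly equal group sizes were wanted, one alternative would be to shuffle the respondent list and deal it out in turn.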

The ECHR is an example of a topic on which the average respondent will not have a particularly strong opinion or prior view: it is a low-salience, low-priority (for voters), and politically and legally complicated issue.

The question in the first split exactly replicated the wording and answer categories of the recently published ECHR polling: Please indicate if you agree or disagree with the following statement: “The UK should leave the European Convention on Human Rights so that it can better control who comes in and out of the country.”

This question produced a closely divided result: 38% agreed with the statement and 41% disagreed, giving net support for leaving the convention of -3. This did not quite replicate the earlier polling we had seen on the topic, but it nonetheless showed a fairly high level of support for leaving.

The second route used an equally leading framing, but on the opposite side of the argument: “The UK should remain in the European Convention on Human Rights so that everyone can enjoy strict legal protection of their fundamental human rights”.

This wording changed the results dramatically: 61% agreed with the statement (and thus supported staying in the convention) while 21% disagreed (and thus supported leaving it), giving net support for leaving of -40. That is a full 37-point swing in net support relative to the first question.

Finally, a neutral question seen by the remaining third asked “In your opinion, do you think the UK should withdraw from the European Convention on Human Rights, or stay in it?”. With this framing, 20% of Britons supported leaving the convention and 48% favoured staying in it, putting net support for withdrawal at -28.

Across the three measurements a clear pattern emerges: it is possible to heavily distort the results simply by leading the respondent towards a particular answer. The question worded in favour of quitting the ECHR put the share wanting to leave 18 points higher than the neutral question did (38% versus 20%), while the question worded in favour of staying in the ECHR put the share wanting to stay 13 points higher (61% versus 48%).
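The net scores and gaps quoted for this experiment are simple differences of percentages. The Python sketch below reproduces them from the reported shares. The dictionary keys are illustrative labels, and mapping "agree" and "disagree" onto leave or stay follows the reading given in the text; responses outside those two options (such as "don't know") do not enter the net figure.

```python
# Reported shares (%) from the three ECHR routes. For the two leading
# agree/disagree questions, "agree"/"disagree" are mapped onto the
# leave/stay positions they imply, as in the article's reading.
ECHR_RESULTS = {
    "leading_leave": {"leave": 38, "stay": 41},   # agree = leave, disagree = stay
    "leading_remain": {"leave": 21, "stay": 61},  # agree = stay, disagree = leave
    "neutral": {"leave": 20, "stay": 48},         # direct withdraw/stay question
}


def net_leave(shares):
    """Net support for leaving: % leave minus % stay, in percentage points."""
    return shares["leave"] - shares["stay"]


nets = {route: net_leave(s) for route, s in ECHR_RESULTS.items()}

# Swing in net support between the two oppositely leading questions.
swing = nets["leading_leave"] - nets["leading_remain"]

# How far each leading question moves "its" side relative to the neutral one.
leave_gap = ECHR_RESULTS["leading_leave"]["leave"] - ECHR_RESULTS["neutral"]["leave"]
stay_gap = ECHR_RESULTS["leading_remain"]["stay"] - ECHR_RESULTS["neutral"]["stay"]

print(nets)                        # {'leading_leave': -3, 'leading_remain': -40, 'neutral': -28}
print(swing, leave_gap, stay_gap)  # 37 18 13
```

Note that the 13-point figure compares the share choosing to stay; the share choosing to leave under the pro-remain wording (21%) is within a point of the neutral question's 20%.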

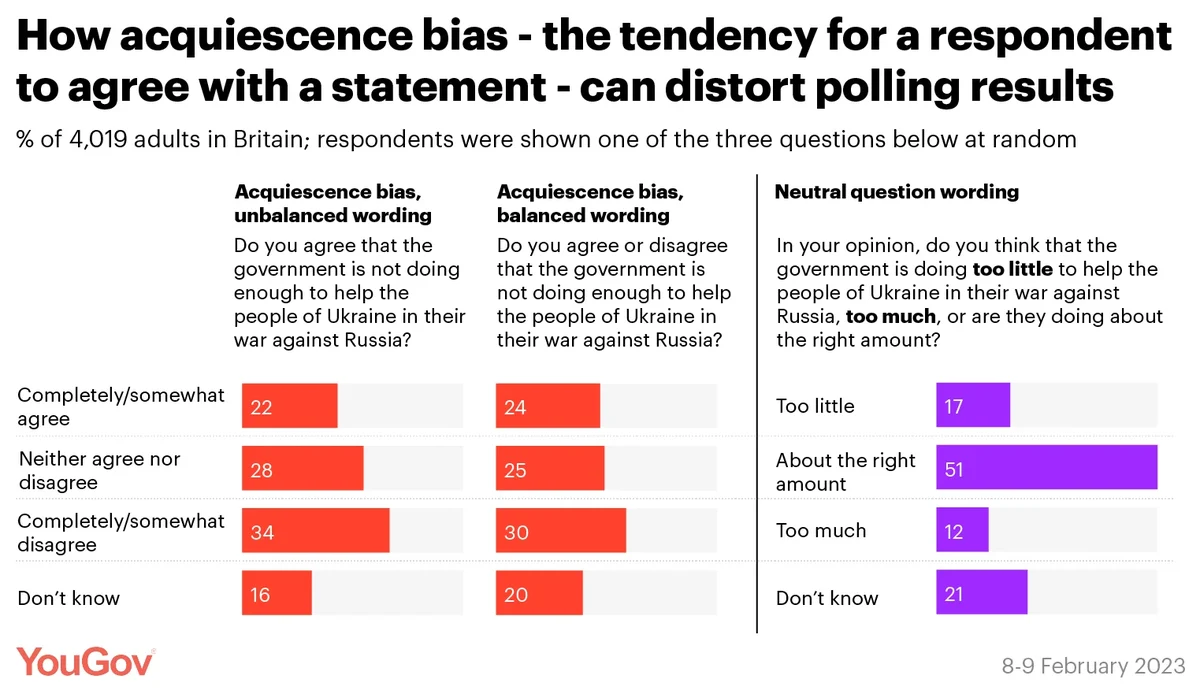

Experiment 2 – Acquiescence bias in measuring support for Ukraine

To test the effect of acquiescence bias directly, a second experiment (on a separate survey) split respondents down another three random routes, this time showing different questions on the topic of the Russia-Ukraine war.

This time, our measurement centred on the percentage of the public who believed the government should be doing more to help the people of Ukraine.

This is a different type of topic, one on which public views are much more firmly formed and, in theory, less susceptible to question wording or framing. For instance, while around a quarter of the public typically say they “don’t know” whether the country should stay in or withdraw from the ECHR, only around one in six “don’t know” whether Britain ought to supply fighter jets to Ukraine.

The first route contained the following question: “Do you agree that the government is not doing enough to help the people of Ukraine in their war against Russia?”. This is an unbalanced agree/disagree statement: the question text asks only about ‘agreement’, not ‘disagreement’. With this wording, 22% agreed that the government was not doing enough to help.

The second route used a balanced agree/disagree statement: the same question text, but with “or disagree” added. This small change in framing produced an equally small change in the results, well within the range of statistical uncertainty, with 24% agreeing that the government ought to do more.

Finally, when we move away from the agree/disagree framing altogether, we can see the effects of acquiescence bias. When asked “In your opinion, do you think that the government is doing too little to help the people of Ukraine in their war against Russia, too much, or are they doing about the right amount?”, just 17% said “too little”. That is a five-point drop compared with the unbalanced agree/disagree question, and a seven-point drop compared with the balanced one.
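The point drops above, and the judgement that a 2-point gap is "within statistical uncertainty", can be illustrated with a standard two-proportion z-test. This is a minimal Python sketch under a loudly stated assumption: the article does not report sample sizes per route, so `N_PER_ROUTE = 1000` is purely hypothetical, and the formula assumes simple random sampling rather than a weighted panel.

```python
import math

# Shares (%) agreeing / answering "too little" on each Ukraine route, as reported.
UKRAINE_RESULTS = {"unbalanced": 22, "balanced": 24, "neutral": 17}

# HYPOTHETICAL sample size per route: the article does not report one.
N_PER_ROUTE = 1000


def two_proportion_z(p1, n1, p2, n2):
    """z statistic for p1 - p2 from two independent samples, using the
    pooled standard error. Assumes simple random sampling (no weighting)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se


# Point drops of each agree/disagree route relative to the neutral question.
drops = {
    route: share - UKRAINE_RESULTS["neutral"]
    for route, share in UKRAINE_RESULTS.items()
    if route != "neutral"
}
print(drops)  # {'unbalanced': 5, 'balanced': 7}

# Compare pairs of routes under the hypothetical sample size.
pairs = [("balanced", "unbalanced"), ("unbalanced", "neutral"), ("balanced", "neutral")]
for a, b in pairs:
    z = two_proportion_z(UKRAINE_RESULTS[a] / 100, N_PER_ROUTE,
                         UKRAINE_RESULTS[b] / 100, N_PER_ROUTE)
    verdict = "significant" if abs(z) > 1.96 else "not significant"
    print(f"{a} vs {b}: z = {z:.2f} ({verdict} at the 5% level)")
```

Under the illustrative 1,000-per-route assumption, the 2-point balanced/unbalanced gap gives a z of roughly 1.1, short of the conventional 1.96 cut-off, which fits the article's description of that change; the 5- and 7-point drops clear it. Weighted online samples typically carry wider uncertainty than this simple formula implies.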

The results confirm that acquiescence bias is a factor, albeit a far smaller one than the leading bias measured above.

The conclusions from these survey experiments are clear: poorly designed survey instruments can distort results and produce measurements of public opinion that are inaccurate at best and completely misleading at worst. It is incumbent on all researchers and producers of knowledge about public opinion to avoid leading questions and always to minimise potential acquiescence bias.