Our new sampling methods have notably increased the accuracy of our results in their first real-time test since the May 2015 general election.

A year ago, the polls got the general election wrong. This week, YouGov was the only company to carry out polls for all three of the London, Scottish and Welsh votes. Following both our own internal investigation and the British Polling Council inquiry, we have changed our sampling so that it reflects the make-up of the electorate more accurately.

Yesterday's elections offered the first real-time test of these new approaches, and on that evidence it is clear that our new sampling methods have notably increased the accuracy of our results.

Scotland and Wales

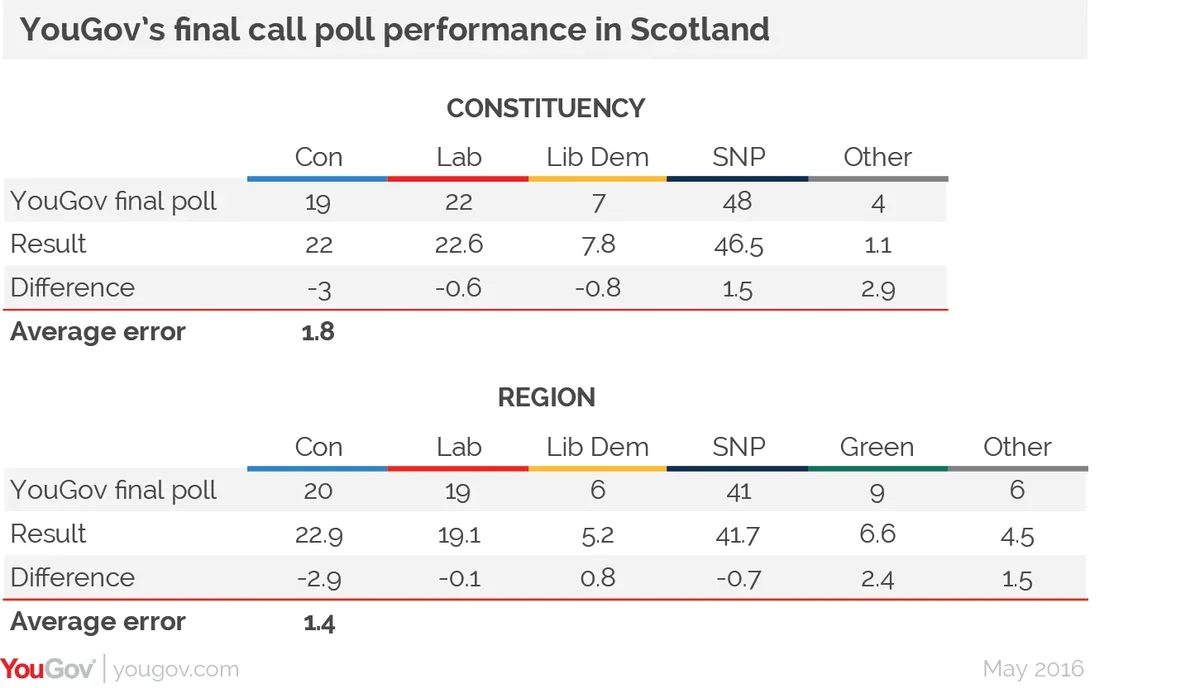

In Scotland we showed the SNP far ahead but falling back in the regional vote, and we were the first company to show the Scottish Conservatives advancing and overtaking Labour in the regional vote. The average error in our final poll was 1.8 percentage points in the constituency vote and 1.4 points in the regional vote, comfortably within the margin of error.
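The article does not spell out how "average error" is calculated. A common definition, assumed here, is the mean absolute difference in percentage points between each party's share in the final poll and its actual share. The minimal Python sketch below uses hypothetical party labels and shares, not our actual Scottish figures.

```python
def average_error(poll: dict[str, float], result: dict[str, float]) -> float:
    """Mean absolute difference, in percentage points, between polled and
    actual shares, taken over the parties present in both (assumed definition)."""
    parties = poll.keys() & result.keys()
    if not parties:
        raise ValueError("poll and result share no parties")
    return sum(abs(poll[p] - result[p]) for p in parties) / len(parties)


# Hypothetical shares (%), for illustration only.
poll = {"A": 48.0, "B": 20.0, "C": 19.0, "D": 13.0}
result = {"A": 46.0, "B": 22.0, "C": 20.0, "D": 12.0}
print(f"{average_error(poll, result):.1f} points")  # prints "1.5 points"
```

Here the per-party errors are 2, 2, 1 and 1 points, so the average is 1.5 points.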

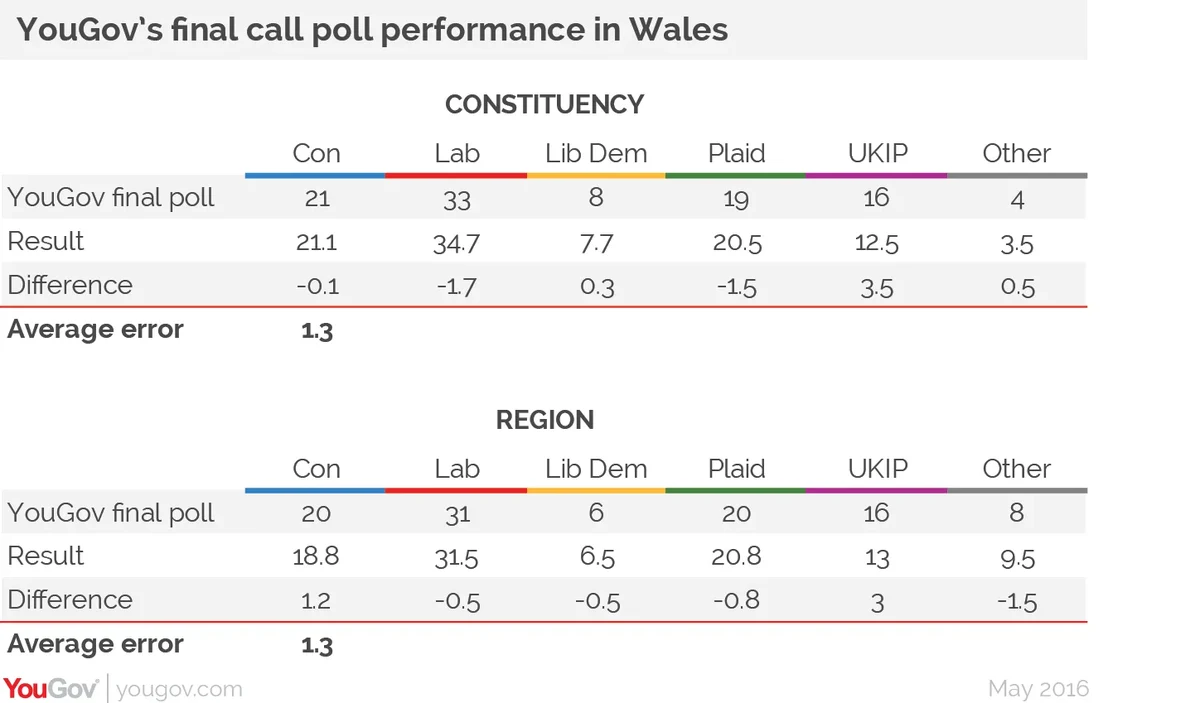

In Wales, we were the only company to poll for the Assembly election. Our final-call figures had an average error of only 1.3 percentage points in both the constituency and the regional vote.

London

In London our final poll got the scale of Sadiq Khan's victory exactly right: it showed him winning by 57% to 43% on the second round, the same as the final result. In the first round we marginally overestimated support for the smaller parties at the expense of Zac Goldsmith.

We cannot claim the polls were perfect; they never are. In Scotland all the polls understated the Conservatives, and in Wales we overstated UKIP. However, our polls this week were far more accurate than last year's, and there was no sign of the skew towards Labour that marred the 2015 polls. Indeed, in every single vote we either got the Labour share spot-on or understated their performance by 1-2 points.

The impact of our sampling changes

Both our internal investigation and the British Polling Council's inquiry into what went wrong found that the main problem with the 2015 polls was sampling. Polls simply interviewed the wrong people: those who took part were too engaged and too interested in politics.

Rather than just dealing with the symptoms, we have addressed the cause by focusing on recruiting people who pay less attention to politics onto our panel. We have also started sampling and weighting our polls by whether people voted at the last election, by their level of education and by how much attention they pay to politics.
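The article does not say which weighting algorithm is used. One standard way to weight a sample to several variables at once, such as past vote, education and attention to politics, is raking (iterative proportional fitting): each respondent's weight is repeatedly rescaled so that each variable in turn matches its target distribution, until all of them agree. The sketch below is illustrative only; the variable names, categories, sample and target proportions are all hypothetical and are not YouGov's actual scheme.

```python
from collections import defaultdict


def weighted_share(respondents, weights, var):
    """Weighted proportion of respondents in each category of `var`."""
    totals = defaultdict(float)
    for r, w in zip(respondents, weights):
        totals[r[var]] += w
    grand = sum(totals.values())
    return {cat: total / grand for cat, total in totals.items()}


def rake(respondents, targets, max_iter=1000, tol=1e-10):
    """Iterative proportional fitting: return one weight per respondent so that
    each variable's weighted distribution matches targets[var].

    Assumes every target category appears in the sample and that each
    variable's target proportions sum to 1.
    """
    weights = [1.0] * len(respondents)
    for _ in range(max_iter):
        max_change = 0.0
        for var, target in targets.items():
            share = weighted_share(respondents, weights, var)
            # Rescale so this variable hits its targets; total weight is unchanged.
            for i, r in enumerate(respondents):
                factor = target[r[var]] / share[r[var]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        # Stop once a full pass over all variables barely moves any weight.
        if max_change < tol:
            break
    return weights


# Hypothetical respondents and targets, for illustration only.
sample = [
    {"past_vote": "voted", "education": "degree", "attention": "high"},
    {"past_vote": "voted", "education": "degree", "attention": "high"},
    {"past_vote": "voted", "education": "no_degree", "attention": "high"},
    {"past_vote": "voted", "education": "no_degree", "attention": "low"},
    {"past_vote": "did_not_vote", "education": "no_degree", "attention": "low"},
    {"past_vote": "did_not_vote", "education": "degree", "attention": "low"},
]
targets = {
    "past_vote": {"voted": 0.66, "did_not_vote": 0.34},
    "education": {"degree": 0.27, "no_degree": 0.73},
    "attention": {"high": 0.40, "low": 0.60},
}

weights = rake(sample, targets)
for var in targets:
    shares = weighted_share(sample, weights, var)
    print(var, {cat: round(s, 3) for cat, s in shares.items()})
```

Feeding the same respondents through a different `targets` dictionary is one way to see how a single set of raw data would look under an alternative weighting scheme.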

On the basis of the results of the Scottish, Welsh and London elections, this new approach appears to be working well.

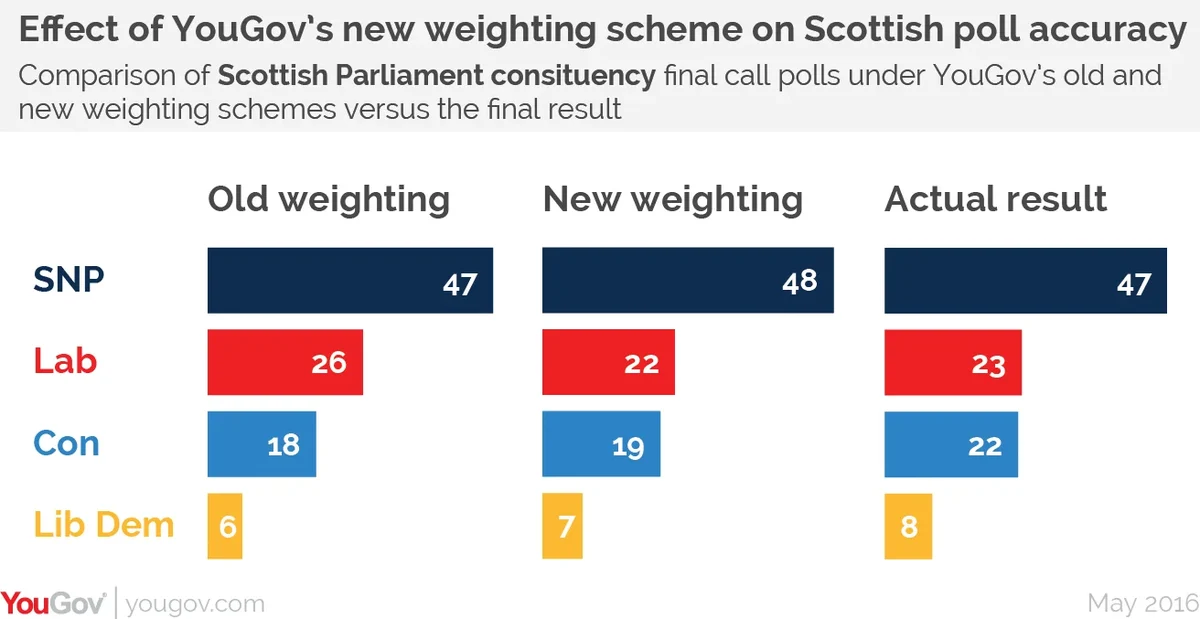

We can't tell for sure what our figures would have been under the old methods, because we can't un-spend all the time and money we've invested in recruiting less engaged people to our panel. However, we can get close by re-running our data with our old weights.

Using our old method we would have shown Scottish Labour eight points ahead of the Scottish Conservatives in the constituency vote and two points ahead in the regional vote. This would have got the story wholly wrong. The changes we’ve introduced seem to have made our polls substantially more accurate.

We will continue to invest in the quality of our panel and to investigate further improvements to our methods, including how we gauge likelihood to vote and how we deal with people who say they don’t know how they will vote.
