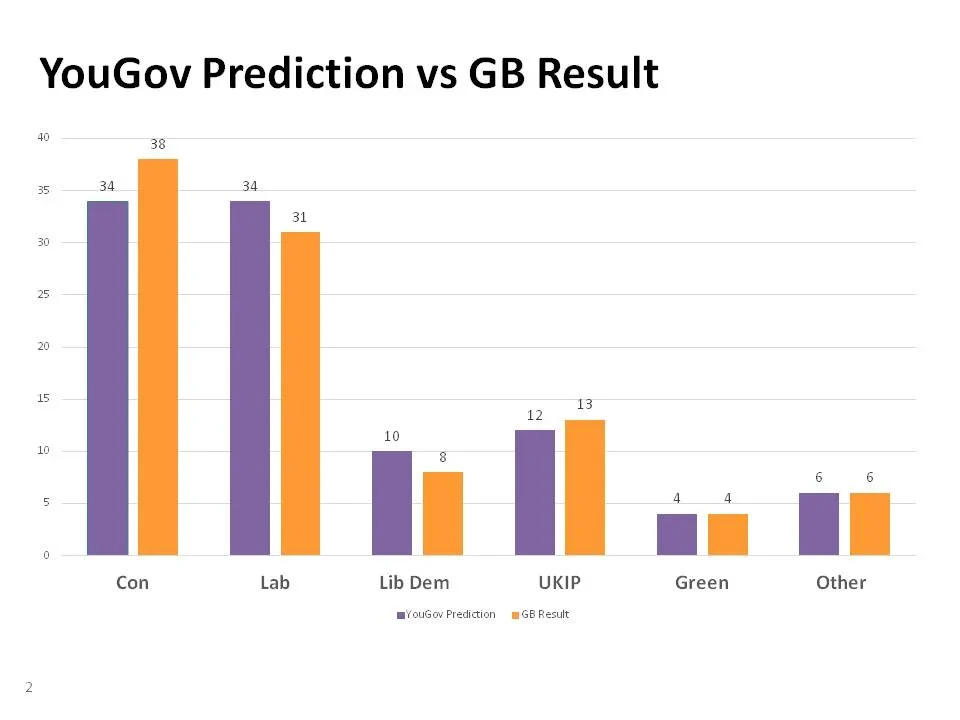

After fifteen years of getting every election right and setting new standards of accuracy in both polling and market research, YouGov got an election wrong. Sure, every other pollster got it wrong too. But YouGov has become the face of the industry, and now there's egg on it.

This matters to readers of this column, because I ask you to trust the numbers that I give you. That trust should not be shaken, not by one inch, and here's why. The methods YouGov used to measure and predict this election are essentially the same methods we used to correctly predict all previous elections we have measured – all national, local, regional, and EU elections since 2001.

They are the same methods we used to correctly predict Pop Idol and the X-Factor, and the same methods behind all the brand tracking work we do, such as predicting the big drop in the value of Tesco. Nothing has changed about the value of our methodology or the accurate, insightful data it provides.

I think what happened last week is that 6% of the electorate did not care to identify themselves as Tories but nevertheless voted Tory. As I wrote two weeks before the election, a Conservative government supported by the LibDems enjoyed a 13% lead over a Labour government supported by the SNP. That disparity had to work its way into tactical voting: many people who did not want to vote Conservative did so to avoid something they disliked even more. We will have to work out how to detect such tactical voting and tactical answers to pollsters.

This has nothing to do with the reliability of all our other research. Nor does it have anything to do with online v offline polling: both methods produced the same (faulty) result. While most political polling has been underestimating Tory support for decades (not just in 1992, but in 1997, 2001, and 2005), we have been getting better and better over the years.

This was an aberration. The industry will learn from this experience and improve.